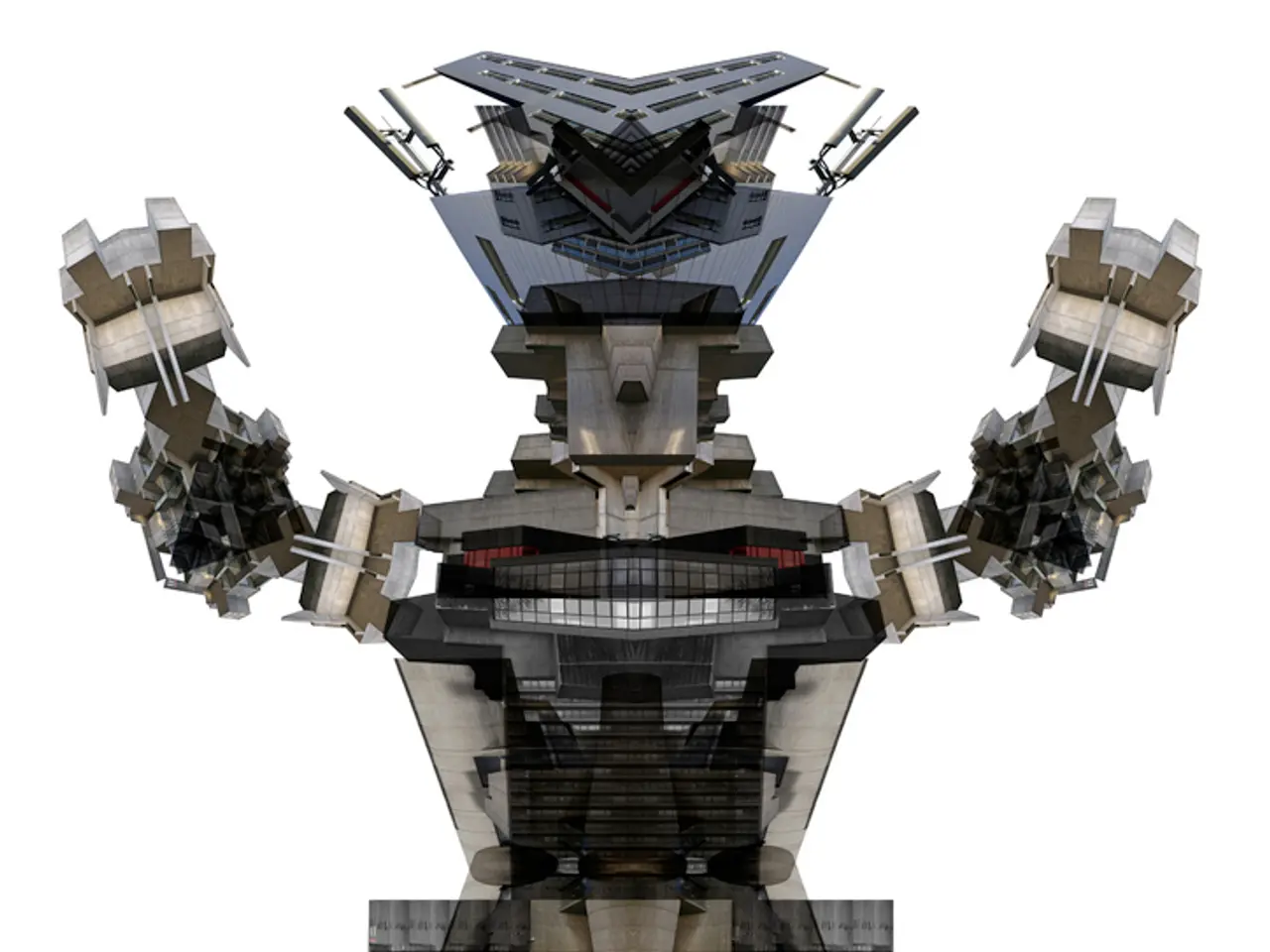

AI Robotics' Independence Divide

In artificial intelligence (AI) and robotics, a significant divide known as the autonomy chasm poses a formidable challenge. It is not merely a technological issue but a fundamental one, requiring a rethinking of compute, reasoning, and embodiment from the ground up.

Current AI demonstrations often rely on teleoperation, with hidden human operators correcting failures. The vision of artificial general intelligence (AGI), by contrast, assumes an AI capable of human-level reasoning: generalizing from few examples, understanding context perfectly, adapting to any situation, and scaling infinitely as algorithms mature. Achieving this vision is far from simple.

The autonomy chasm reflects a fundamental difference in problem complexity. For instance, current AI systems run on GPUs that draw around 700W, while the human brain achieves superior performance on about 20W, roughly 35 times less power. This efficiency gap is one of the key challenges that must be addressed.
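To make the efficiency gap concrete, the short sketch below works through the arithmetic using the two power figures quoted above. The 24-hour continuous duty cycle is an assumption added for illustration; the text does not specify one.

```python
# Power figures quoted in the text.
GPU_POWER_W = 700.0
BRAIN_POWER_W = 20.0

# Assumption for illustration: both run continuously for a full day.
HOURS_PER_DAY = 24.0


def daily_energy_kwh(power_w: float, hours: float = HOURS_PER_DAY) -> float:
    """Energy used over `hours` at constant power, in kilowatt-hours."""
    return power_w * hours / 1000.0


power_ratio = GPU_POWER_W / BRAIN_POWER_W
gpu_kwh = daily_energy_kwh(GPU_POWER_W)
brain_kwh = daily_energy_kwh(BRAIN_POWER_W)

print(f"power ratio: {power_ratio:.0f}x")
print(f"GPU: {gpu_kwh:.2f} kWh/day, brain: {brain_kwh:.2f} kWh/day")
```

Under these assumptions the GPU uses 16.8 kWh per day against 0.48 kWh for the brain, and that is before counting cooling, sensors, or actuators.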

Another challenge lies in decision cycles. AI systems take 50-100ms per cycle, far slower than the sub-millisecond responses required for safe, adaptive interaction. These systems also tend to break down when they encounter novel objects outside their training distribution, which highlights the need for better learning and reasoning capabilities.
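One way to see why cycle time matters is to ask how far a moving robot travels between two consecutive decisions. The sketch below does that calculation for the cycle times mentioned above. The 1 m/s speed is a hypothetical value chosen for illustration, not a figure from the text.

```python
# Assumption for illustration only: the text gives no end-effector speed.
ASSUMED_SPEED_M_PER_S = 1.0


def blind_travel_mm(cycle_ms: float, speed_m_per_s: float = ASSUMED_SPEED_M_PER_S) -> float:
    """Distance travelled during one decision cycle, in millimetres.

    Metres per second times milliseconds gives millimetres directly.
    """
    return speed_m_per_s * cycle_ms


# 50 ms and 100 ms are the cycle times from the text; 1 ms stands in
# for the sub-millisecond boundary.
for cycle_ms in (50.0, 100.0, 1.0):
    distance = blind_travel_mm(cycle_ms)
    print(f"{cycle_ms:>5.0f} ms cycle -> {distance:.0f} mm between decisions")
```

At the assumed speed, a 50-100ms cycle leaves the robot moving 50-100 mm without a fresh decision, compared with about 1 mm at a 1ms cycle.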

Progress towards bridging the autonomy chasm can be made through layered approaches like hybrid autonomy, cloud-assisted autonomy, and fleet learning. However, it's crucial to avoid overspending on scaling production before solving efficiency bottlenecks.
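The text does not define hybrid autonomy in detail. One plausible reading is a controller that acts on its own when confident and hands off to a human or cloud service otherwise. The sketch below illustrates that reading only. All names (`HybridController`, `toy_policy`, `toy_operator`) and the 0.8 threshold are hypothetical, and the policy is a stand-in lookup table rather than a real model.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

CONFIDENCE_THRESHOLD = 0.8  # hypothetical cut-off; a real system would tune this


@dataclass
class HybridController:
    """Routes each decision to the on-board policy or to a fallback."""
    policy: Callable[[str], Tuple[str, float]]  # returns (action, confidence)
    operator: Callable[[str], str]              # human or cloud fallback
    threshold: float = CONFIDENCE_THRESHOLD
    autonomous_steps: int = 0
    assisted_steps: int = 0

    def step(self, observation: str) -> str:
        action, confidence = self.policy(observation)
        if confidence >= self.threshold:
            self.autonomous_steps += 1
            return action
        # Low confidence: hand off and record that help was needed.
        self.assisted_steps += 1
        return self.operator(observation)

    def autonomy_rate(self) -> float:
        """Fraction of steps handled without the fallback."""
        total = self.autonomous_steps + self.assisted_steps
        return self.autonomous_steps / total if total else 0.0


def toy_policy(observation: str) -> Tuple[str, float]:
    # Stand-in for a learned model: confident only on familiar objects.
    known = {"cup": 0.95, "box": 0.9}
    return f"grasp {observation}", known.get(observation, 0.3)


def toy_operator(observation: str) -> str:
    return f"operator handles {observation}"


controller = HybridController(policy=toy_policy, operator=toy_operator)
actions = [controller.step(obj) for obj in ["cup", "box", "cactus", "cup"]]
print(actions)
print(f"autonomy rate: {controller.autonomy_rate():.2f}")
```

Counting assisted steps explicitly keeps the share of teleoperated decisions visible, rather than letting it hide behind a demonstration that looks autonomous.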

Investors must be wary of overvaluing companies that conflate teleoperation with autonomy. Policymakers, for their part, risk underestimating the regulatory and safety frameworks required for systems that still fail unpredictably.

The implicit assumption that compute will scale infinitely, latency will vanish, and costs will plummet once algorithms mature is a risky one. The winners in this field will be those who manage the gap: capturing capital and momentum without overpromising, while investing in the breakthroughs that autonomy truly requires.

Companies working on neuromorphic chips and causality-based cognitive models to bridge autonomy gaps in robotics include Intel, with its Loihi 2 chip, and IBM, with its TrueNorth chip. Nvidia also builds advanced AI chips, though these primarily target deep learning. Academic and research collaborations, such as those at JKU in Austria, report breakthroughs with spiking neural networks on FPGA hardware, emphasizing energy efficiency and speed.

In short, humans possess a stack of capabilities evolved over millions of years, while machines replicate only fragments of it, at massive cost, with higher fragility and slower response. Until that gap closes, most demonstrations will remain teleoperated illusions of autonomy. The journey towards AGI is long, but the potential rewards are immense.