Researchers Reduce Bias in AI Models While Preserving or Improving Accuracy

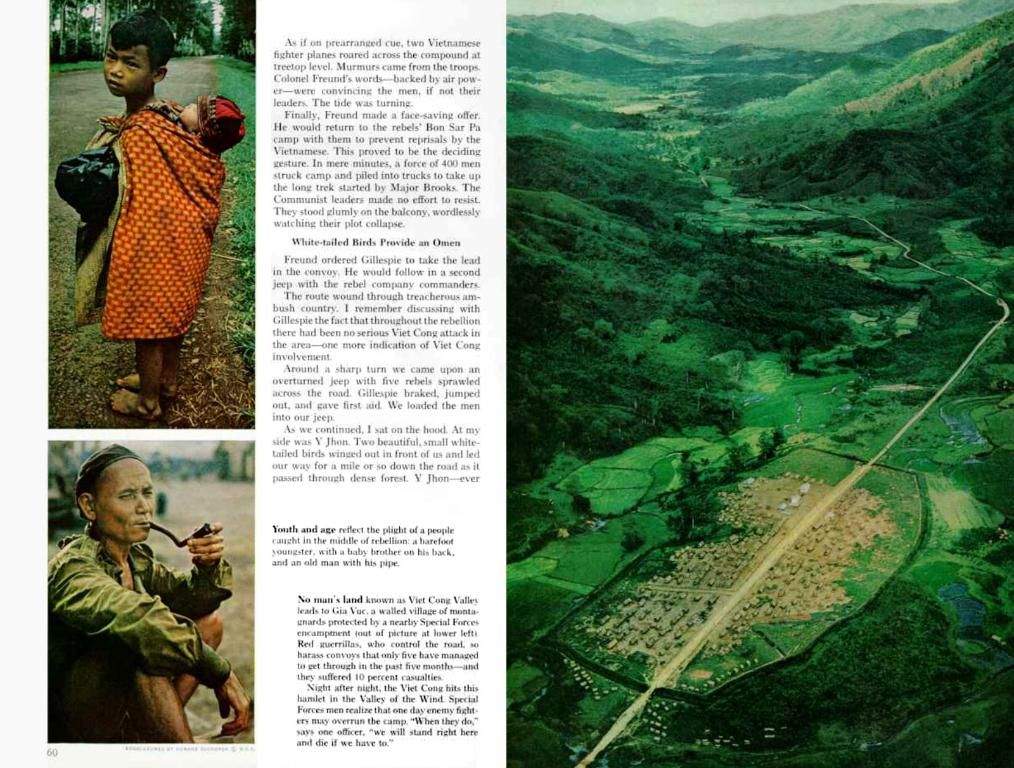

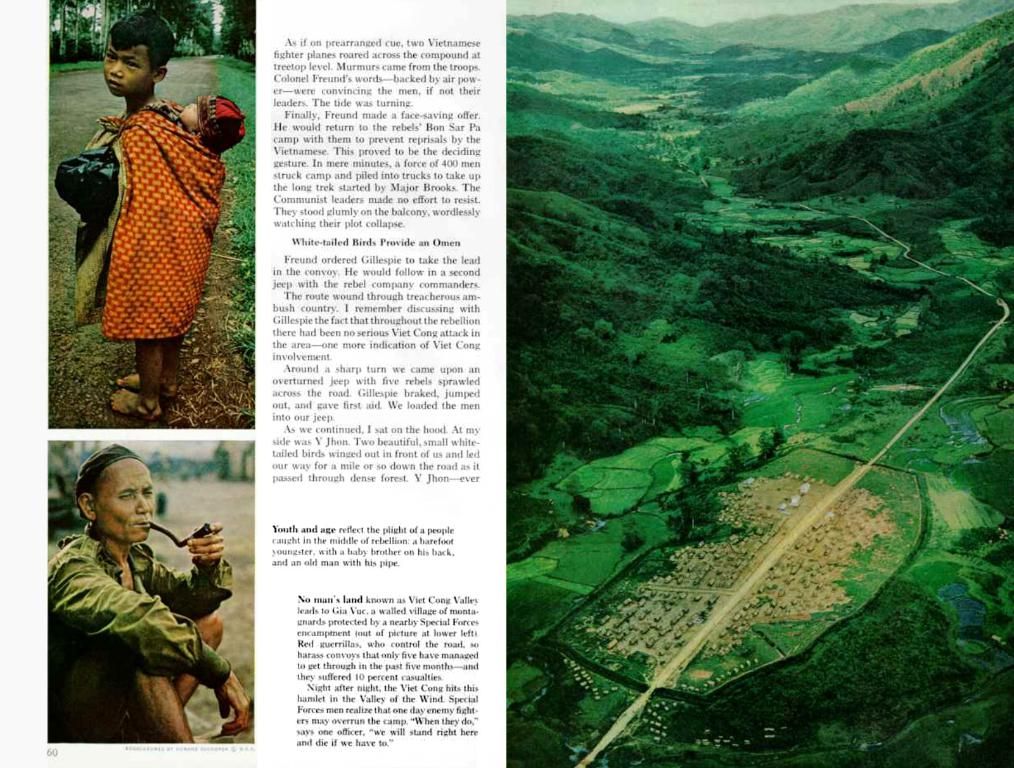

Machine-learning models can fail when they try to make predictions for individuals who were underrepresented in the datasets they were trained on. For example, a model that suggests the best treatment for someone with a chronic disease may be trained on a dataset that contains mostly male patients. That model might make incorrect predictions for female patients when it is deployed in a hospital.

To improve outcomes, engineers can try balancing the training dataset by removing data points until all subgroups are represented equally. While dataset balancing is promising, it often requires removing large amounts of data, which can hurt the model's overall performance.
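The balancing strategy described above can be sketched in a few lines. This is a minimal illustration, not the procedure any particular study used: it assumes that balancing means randomly downsampling every subgroup to the size of the smallest one, and the function name `balance_by_subsampling` and the 900/100 toy split are hypothetical.

```python
import numpy as np

def balance_by_subsampling(groups, seed=0):
    """Return sorted indices that keep an equal number of samples per group.

    Assumption for this sketch: every group is randomly downsampled to the
    size of the smallest group.
    """
    rng = np.random.default_rng(seed)
    groups = np.asarray(groups)
    labels, counts = np.unique(groups, return_counts=True)
    target = counts.min()  # size of the smallest subgroup
    kept = []
    for label in labels:
        members = np.flatnonzero(groups == label)
        kept.append(rng.choice(members, size=target, replace=False))
    return np.sort(np.concatenate(kept))

# Hypothetical toy dataset: 900 male patients, 100 female patients.
groups = np.array(["male"] * 900 + ["female"] * 100)
kept = balance_by_subsampling(groups)
n_removed = len(groups) - len(kept)
print(len(kept), n_removed)  # 200 kept, 800 removed
```

In this toy case, balancing discards 800 of 1,000 samples, which illustrates why the approach can cost so much overall performance.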

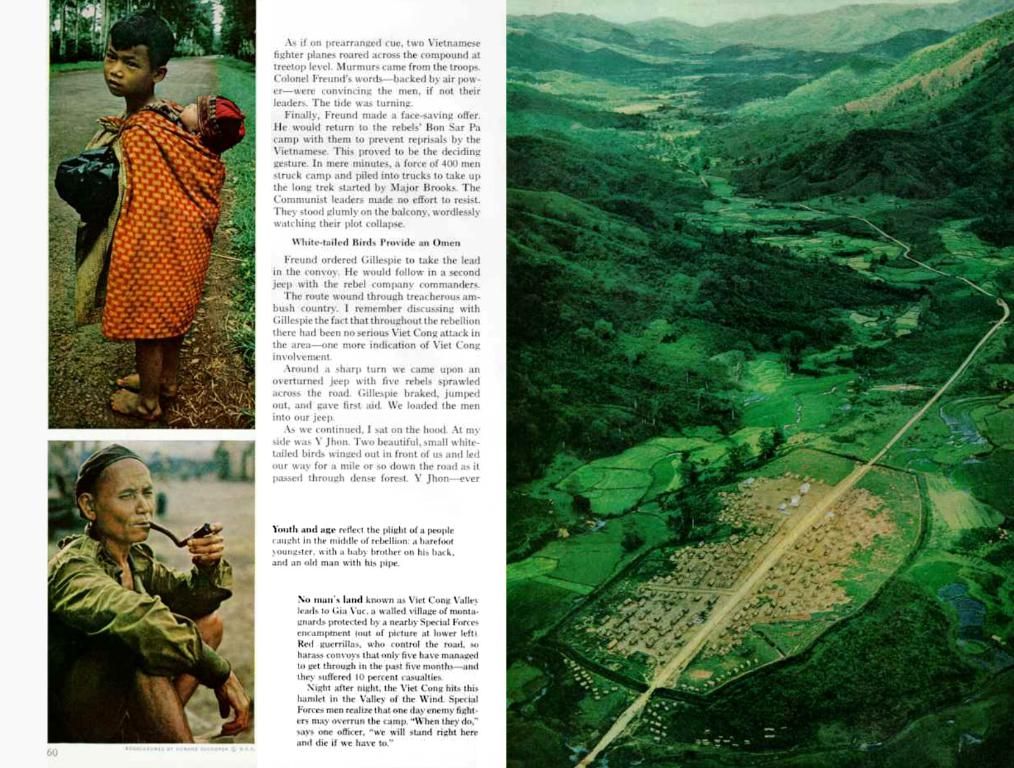

Researchers from MIT have developed a technique that identifies and removes the specific data points in a training dataset that contribute most to a model's failures on underrepresented groups. Because it removes fewer data points than other methods, the approach preserves the model's overall accuracy while improving its performance on underrepresented groups.

The method can also identify hidden sources of bias in a training dataset that lacks labels, which is common in many applications. It could be combined with other approaches to improve the fairness of machine-learning models in high-stakes settings, for example by helping to ensure that underrepresented patients are not misdiagnosed by a biased AI model.

"Many other algorithms attempt to tackle this issue by assuming each datapoint matters equally," explains Kimia Hamidieh, an electrical engineering and computer science graduate student at MIT and co-lead author of the associated research paper. "We are showing that this assumption isn't necessarily true. There are specific points in our dataset causing this bias, and we can pinpoint and remove those to achieve better performance."

The researchers' method combines two steps: identifying bad examples that hurt a model's performance, which are often present in large internet-sourced datasets, and removing those problematic data points. The approach targets "worst-group error," which occurs when a model underperforms on minority subgroups in a training dataset.
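To make the worst-group idea concrete, the sketch below computes accuracy separately for each subgroup and reports the lowest one. The helper name `worst_group_accuracy` and the toy labels are illustrative assumptions, not taken from the research paper.

```python
import numpy as np

def worst_group_accuracy(y_true, y_pred, groups):
    """Return (worst group name, its accuracy, accuracy for every group)."""
    y_true, y_pred, groups = map(np.asarray, (y_true, y_pred, groups))
    correct = y_true == y_pred
    per_group = {
        str(g): float(correct[groups == g].mean()) for g in np.unique(groups)
    }
    worst = min(per_group, key=per_group.get)
    return worst, per_group[worst], per_group

# Hypothetical toy predictions: 8 majority-group and 2 minority-group samples.
y_true = [1, 0, 1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 0, 1, 1, 0, 0]
groups = ["majority"] * 8 + ["minority"] * 2

worst, worst_acc, per_group = worst_group_accuracy(y_true, y_pred, groups)
overall_acc = float(np.mean(np.asarray(y_true) == np.asarray(y_pred)))
print(overall_acc, worst, worst_acc)  # 0.8 minority 0.5
```

Here the overall accuracy of 0.8 hides the fact that the model is right only half the time on the minority group, which is the gap that worst-group metrics are meant to expose.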

To achieve this, they took incorrect predictions the model made about underrepresented groups and used TRAK (an existing method that identifies the critical training examples contributing to a specific model output) to determine which data points contributed the most to the incorrect prediction.
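The selection step can be illustrated with a small sketch. It does not call the TRAK library. Instead it assumes attribution scores have already been computed and arranged in a hypothetical `harm_scores` matrix with one row per incorrect prediction and one column per training example, where a higher value means a larger contribution to the error. The sign convention, the summing across failures, and the cutoff `k` are all assumptions made for illustration and may differ from the researchers' implementation.

```python
import numpy as np

def select_points_to_remove(harm_scores, k):
    """Pick the k training examples with the largest total harm score.

    harm_scores: array of shape (n_failures, n_train). Assumed convention:
    higher value = contributed more to an incorrect prediction.
    """
    harm_scores = np.asarray(harm_scores, dtype=float)
    total_harm = harm_scores.sum(axis=0)  # aggregate over all failures
    order = np.argsort(-total_harm, kind="stable")  # most harmful first
    return order[:k], total_harm

# Hypothetical scores: 3 incorrect predictions, 5 training examples.
harm_scores = np.array([
    [0.1, 0.9, 0.0, 0.2, 0.8],
    [0.0, 0.7, 0.1, 0.1, 0.9],
    [0.2, 0.8, 0.0, 0.0, 0.6],
])
to_remove, total_harm = select_points_to_remove(harm_scores, k=2)

# Boolean mask for the training examples that would be kept.
keep_mask = np.ones(harm_scores.shape[1], dtype=bool)
keep_mask[to_remove] = False
print(to_remove.tolist())  # [1, 4]
```

Only two of the five training examples are dropped here, in contrast to balancing, which removes data without regard to how much each point contributes to the failures.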

Their method outperformed multiple techniques across three machine-learning datasets. In one instance, it improved worst-group accuracy while removing about 20,000 fewer training samples than a conventional data balancing approach. It also achieved higher accuracy than methods that require changes to a model's inner workings.

Because the MIT method changes the dataset rather than the model, it could be easier for practitioners to apply to many types of models and applications. It can also provide insights when the bias is unknown because the subgroups in a training dataset are not labeled.

The researchers hope to validate and further explore the technique through future human studies. They also aim to improve its performance and reliability, and to make it accessible and easy to use for practitioners working in real-world environments.

This work is co-funded by the National Science Foundation and the U.S. Defense Advanced Research Projects Agency.

- The new technique developed by researchers from MIT focuses on changing a dataset rather than modifying the model, making it more adaptable for various machine-learning models and applications.

- The MIT method addresses "worst-group error," where a model underperforms on minority subgroups in a training dataset, by identifying and removing problematic data points.

- By using TRAK, an existing method that identifies the critical training examples contributing to a specific model output, the researchers were able to determine which data points contributed to incorrect predictions about underrepresented groups.

- The MIT method outperformed multiple techniques across three machine-learning datasets, in one instance removing about 20,000 fewer training samples than a conventional data balancing approach, which could help improve the fairness of machine-learning models in high-stakes scenarios.